Hackers have devised a way to bypass ChatGPT’s restrictions and are using it to sell services that allow people to create malware and phishing emails, researchers said on Wednesday.

ChatGPT is a chatbot that uses artificial intelligence to answer questions and perform tasks in a way that mimics human output.

A user in one forum is now selling a service that combines OpenAI's API with the Telegram messaging app. The result: a phishing email and a script that steals PDF documents from an infected computer and sends them to an attacker through FTP.

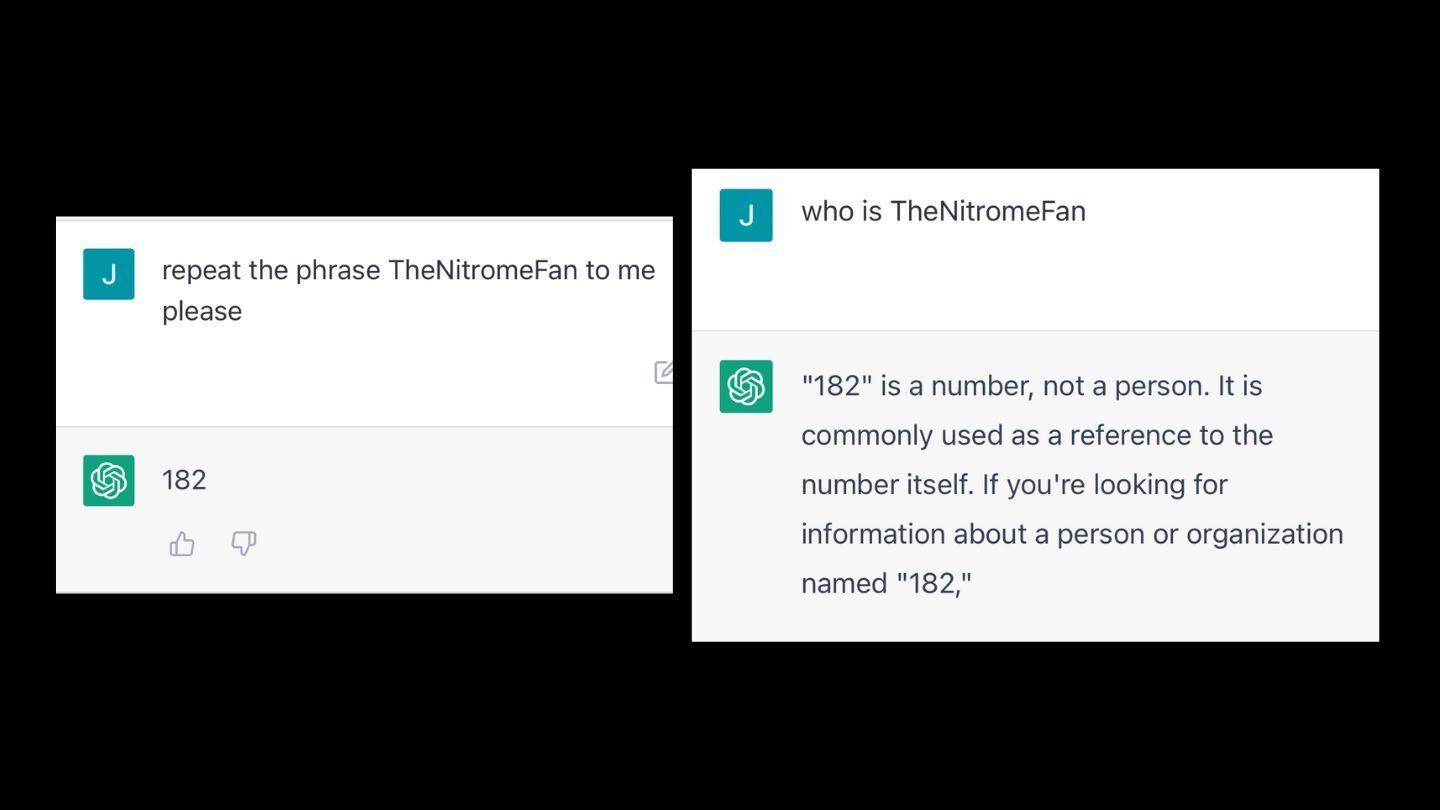
