Apple recently patented an augmented reality (AR) system capable of identifying objects in a live video stream and presenting corresponding information via a computer-generated information layer overlaid on the real-world image.

Apple’s U.S. Patent No. 8,400,548 for “Synchronized, interactive augmented reality displays for multifunction devices” describes an AR system that draws on iOS features such as multi-touch and the camera to enable more advanced AR functionality.

As AppleInsider’s Mikey Campbell points out, the ‘548 patent, like other AR systems, takes real-world images and displays them underneath a computer-generated layer of information.

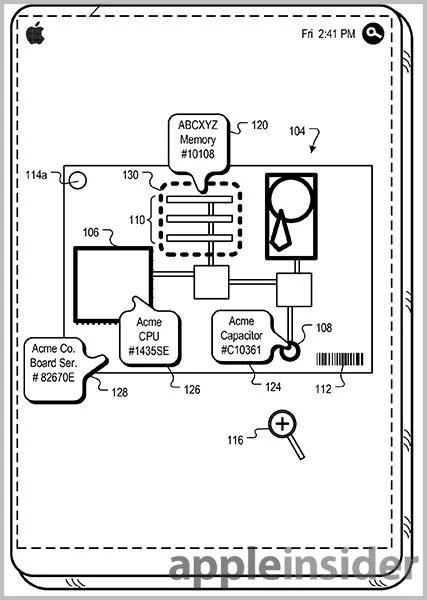

“In the invention’s example, a user is holding their portable device over a circuit board,” Campbell explained. “The live video displays various components like the processor, memory cards and capacitors. Also included is a bar code and markers.”

In one depiction, the live video is overlaid with a combined information layer containing real-time annotations in the form of text, images and Web links. To identify the objects, Apple’s AR system uses existing recognition techniques, including edge detection, scale-invariant feature transform (SIFT), template matching, explicit and implicit 3D object models, 3D cues and Internet data. As expected, recognition can be performed on the mobile device itself or via the Web.
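To give a rough sense of what one of those techniques involves, here is a minimal sketch of template matching in plain Python. It is not taken from the patent: the function name `match_template`, the toy `frame` and `chip` grids, and the choice of sum of squared differences as the score are all assumptions made for illustration. The idea is simply to slide a small reference image over a larger one and keep the position where the pixels differ least.

```python
def match_template(image, template):
    """Return (row, col, score) of the best match.

    The score is the sum of squared differences (SSD) between the
    template and the image patch at that position; lower is better.
    Both arguments are 2D lists of grayscale values (hypothetical data).
    """
    img_h, img_w = len(image), len(image[0])
    tpl_h, tpl_w = len(template), len(template[0])
    best = None
    # Try every position where the template fits fully inside the image.
    for r in range(img_h - tpl_h + 1):
        for c in range(img_w - tpl_w + 1):
            ssd = sum(
                (image[r + i][c + j] - template[i][j]) ** 2
                for i in range(tpl_h)
                for j in range(tpl_w)
            )
            if best is None or ssd < best[2]:
                best = (r, c, ssd)
    return best


# Toy 4x5 "video frame" with a bright 2x2 component in it.
frame = [
    [0, 0, 0, 0, 0],
    [0, 9, 8, 0, 0],
    [0, 7, 9, 0, 0],
    [0, 0, 0, 0, 0],
]
# Reference image of the component we want to find.
chip = [
    [9, 8],
    [7, 9],
]

row, col, score = match_template(frame, chip)
print(row, col, score)  # 1 1 0
```

A real system would work on camera frames rather than tiny lists and would likely combine several of the techniques listed above, but the sliding-window comparison is the core of the approach.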

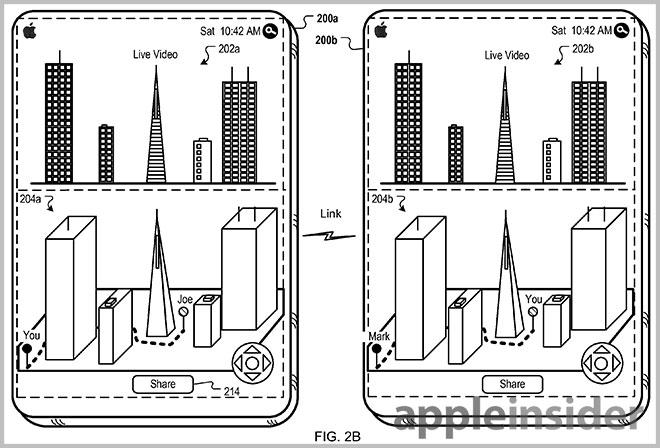

What sets Apple’s system apart, however, is that users can interact with the generated data. If the detection system fails to correctly identify an object, the user can enter the necessary annotation using on-screen controls and send the live view data to another device over a wireless network. Annotations such as circles or text can also be added to the image, and two collaborators can interact with one another via voice communications.

Finally, Cupertino’s AR system offers an optional split-screen view, with the live and computer-generated views shown in two separate windows.