Building traffic for your website is a form of art. Whether you’ve strategically created a great backlink to your site, or crafted a viral piece of content, it can be extremely rewarding to see your visitor numbers skyrocket over time.

But are your traffic statistics telling the whole story about your website’s true popularity? If you jump from 1,000 weekly visitors to 10,000, it’s undoubtedly a good thing for your website’s exposure, but are those new visitors leading to conversions? And are they staying on your pages for more than a matter of seconds?

If you’re noticing that more visitors are briefly settling on your page, avoiding any interaction with your website, and then bouncing away into cyberspace, it could mean one of two things: either your pages could do with a little cleaning up, or you’re attracting bad traffic.

But what is bad traffic? And how does it impact your website? Let’s take a deeper look at what bad traffic actually means and how it can severely hinder your performance online:

What is bad traffic?

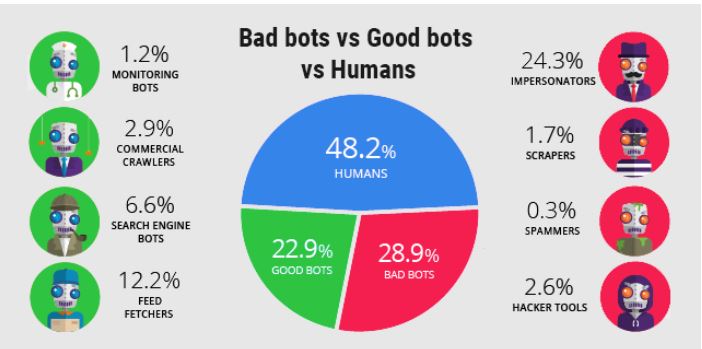

When cybersecurity firm Imperva ran an investigation to see how its traffic was broken down, the results showed that there were more bots than human visitors accessing its pages.

In total, 48.2% of Imperva’s traffic was human, while almost 29% consisted of ‘bad bots’. Elsewhere, 22.9% of traffic consisted of ‘good bots’. But what are good bots and bad bots and why do they feature so heavily within website traffic statistics?

In 2019, ZDNet claimed that bad bots represented around 20% of all internet traffic. Bad bots are becoming increasingly prevalent thanks to the ease with which they can be developed and programmed. They’re omnipresent on social media, with users capable of buying 5,000 Twitter followers for around $50. Users can also buy positive product ratings from bots for as little as $2.

Away from consumer and company vanity projects, good bots and bad bots perform very different tasks when they arrive on your website.

In the good bots corner, there are copyright bots that take it upon themselves to search the web for work that may have been plagiarized – if a user uploads illegal content or copies text word-for-word, it’s the job of copyright bots to make sure they don’t get away with it.

Data bots act similarly to copyright bots and typically trawl the internet for up-to-date information on various subjects. Home assistants like Amazon’s Alexa and Google Home tend to rely on data bots in order to provide their owners with the help they need in real-time.

Spider bots are used primarily by search engines and commercial businesses to crawl the internet searching for query results. Google has its very own Googlebot for crawling, which utilizes the search engine’s algorithms to learn which sites to explore.

Your website could also be visited by trader bots – which do the admirable job of scurrying across the web and onto websites looking for the best deals on a particular product or service.

Sadly, there are more bad bots than good bots in cyberspace today. These often consist of impersonators like clickbots, which fraudulently click on company ads, skewing large amounts of data and causing companies to waste money on ineffective PPC campaigns. Download bots act similarly to clickbots and warp on-site download figures.

Then there’s the scraper bot, which is programmed to trawl the web looking for content to steal and repurpose elsewhere online. Elsewhere, spam bots may access the pages of popular sites to distribute mass spammy comments, while spy bots can trawl the internet looking for personal data to sell on for marketing purposes.

The dangers of impersonators

For a website owner, impersonators are perhaps the biggest threat to your site management. Botnets consist of a vast array of malware-infected devices (known as zombies) that operate under the control of a single attacker. Combined, these devices can be a powerful adversary for just about any website owner, with enough processing power to conduct heavy attacks.

Almost all devices are hacked with the aim of carrying out malicious activities. Distributed Denial-of-Service (DDoS) attacks are commonly carried out by botnets that target a single website with masses of requests at the same time. This can render a website inoperable for some time and lead to losses in conversions and profits. Due to the growth of cloud computing and the Internet of Things (IoT), many botnets actually consist of connected devices rather than PCs. With masses of internet-enabled devices on the market using weaker security, hackers have found the IoT a fruitful source for building botnets.
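To make the idea of "masses of requests at the same time" more concrete, here is a minimal Python sketch of a sliding-window request counter. Everything in it (the `RateMonitor` class, the thresholds, and the client IDs) is a hypothetical illustration rather than part of any particular tool, and it assumes requests arrive with non-decreasing timestamps.

```python
from collections import defaultdict, deque


class RateMonitor:
    """Flags clients that exceed a request budget within a sliding time window.

    Hypothetical helper for illustration; the thresholds are arbitrary assumptions.
    """

    def __init__(self, max_requests=100, window_seconds=10.0):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # client id -> timestamps of that client's recent requests
        self._hits = defaultdict(deque)

    def record(self, client_id, timestamp):
        """Record one request and return True if the client is now over budget."""
        hits = self._hits[client_id]
        hits.append(timestamp)
        # Drop timestamps that have fallen out of the window.
        while hits and timestamp - hits[0] > self.window_seconds:
            hits.popleft()
        return len(hits) > self.max_requests


monitor = RateMonitor(max_requests=3, window_seconds=1.0)
flags = [monitor.record("client-a", t) for t in (0.0, 0.1, 0.2, 0.3)]
# Only the fourth request within one second pushes client-a over the budget of 3.
```

Bear in mind that a per-client counter like this is likely to catch only the noisiest sources. Because a DDoS attack spreads its requests across many devices, each zombie can stay under the threshold, so a check of this kind is a partial measure at best.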

Embracing the good bots

The threats and potential losses in revenue that bad bots can cause your website can be offset in many ways by embracing the potential offered by good bots. Unlike their ‘bad’ counterparts, ‘good’ bots are typically developed to aid both businesses and consumers.

In fact, good bots have been around for decades, and search engine crawlers have played a big role in making the early internet accessible for users.

The chances are that your website has incorporated, or is looking to incorporate, friendly bots through the installation of chatbots across your pages. Chatbots help to attend to a customer’s needs by automatically searching for information that matches their requests. They can provide a friendly tone and even anticipate any issues the customer may be having.

Identifying and mitigating bot traffic

Sadly, there’s no simple way of getting rid of bad bot traffic from your website completely, but there are plenty of measures you can take to mitigate its impact.

It’s important to understand your traffic in order to measure your conversions and better know your audiences. Bad bot traffic distorts your figures and makes them harder to interpret, but luckily there are plenty of solutions out there that are capable of breaking down your traffic streams and exploring just how many of those who access your pages are bad bots.
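As a rough illustration of what breaking down a traffic stream looks like, the Python sketch below turns a list of already-classified visits into percentage shares. The `traffic_breakdown` function and the category labels are hypothetical, and the sample data is invented purely for the example. How each visit gets its label is a separate problem that this sketch doesn't try to solve.

```python
from collections import Counter


def traffic_breakdown(labels):
    """Return each category's share of total visits as a percentage (one decimal)."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


# Invented sample: ten visits that have already been labelled by some other process.
sample = ["human"] * 5 + ["bad_bot"] * 3 + ["good_bot"] * 2
shares = traffic_breakdown(sample)
# shares == {"human": 50.0, "bad_bot": 30.0, "good_bot": 20.0}
```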

Platforms like Finteza are great at detecting bot traffic and mapping out exactly the types of bad bots that are interested in your site. These insights can work wonders if you’re about to take on a new marketing campaign and want to measure your results.

A popular method of reducing the impact that bad bots have on pages is to introduce a CAPTCHA when requests are made. This method won’t get rid of all bad traffic, but it will certainly help to curb some nuisance bots.

It’s also possible to identify bots based on their signatures, which basically means the patterns that they’ve left in the past. Though this form of bot detection is fairly reliable, it’s less effective in highlighting new bots. To keep up with fresh bots entering the scene, you’ll need to update known bot signatures and create new ones based on your traffic analysis.
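Here is a minimal Python sketch of signature matching, assuming the signature is a pattern in the User-Agent header that a client sends. The signature list, `match_signature`, and `add_signature` are hypothetical names chosen for illustration. Real bots can fake their User-Agent, so treat this as one signal among several rather than a dependable filter.

```python
import re

# Hypothetical starting list: patterns that appear in the default User-Agent
# strings of some common automation tools.
SIGNATURES = {
    "python-requests": re.compile(r"python-requests", re.IGNORECASE),
    "curl": re.compile(r"\bcurl/", re.IGNORECASE),
    "headless-chrome": re.compile(r"HeadlessChrome", re.IGNORECASE),
}


def match_signature(user_agent, signatures=SIGNATURES):
    """Return the name of the first matching signature, or None if nothing matches."""
    for name, pattern in signatures.items():
        if pattern.search(user_agent):
            return name
    return None


def add_signature(name, pattern, signatures=SIGNATURES):
    """Register a new signature, for example after spotting a pattern in your logs."""
    signatures[name] = re.compile(pattern, re.IGNORECASE)


browser_ua = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
)
known = match_signature("python-requests/2.31.0")  # matches an existing signature
unknown = match_signature(browser_ua)              # ordinary browser, no match

# "ExampleBot" is a made-up name standing in for a newly observed bot.
add_signature("examplebot", r"ExampleBot")
fresh = match_signature("ExampleBot/1.0")
```

Keeping the signatures in a plain dictionary means that updating them is a one-line change, which suits the ongoing maintenance that this kind of detection needs.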