IBM is currently building a 120-petabyte “drive” – or storage repository – that will eventually comprise 200,000 conventional hard disk drives (HDDs).

According to IBM's Bruce Hillsberg, the gargantuan data container is expected to store approximately one trillion files, allowing the “drive” to house the data required for complex simulations such as weather and climate models.
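Those figures imply some simple averages. The short Python sketch below works them out, assuming decimal (SI) units, an even split of the full 120 petabytes across all 200,000 drives, and no capacity set aside for redundancy or spares. The article does not say whether 120 PB is raw or usable capacity, so treat the results as rough orders of magnitude rather than IBM's specifications.

```python
# Assumption: decimal (SI) units, 1 PB = 10**15 bytes, 1 GB = 10**9 bytes, 1 KB = 10**3 bytes.
# Assumption: capacity is divided evenly, with nothing reserved for redundancy or spares.
TOTAL_BYTES = 120 * 10**15   # 120 petabytes
NUM_DRIVES = 200_000         # conventional HDDs
NUM_FILES = 10**12           # roughly one trillion files

bytes_per_drive = TOTAL_BYTES // NUM_DRIVES
avg_bytes_per_file = TOTAL_BYTES // NUM_FILES

print(f"{bytes_per_drive / 10**9:.0f} GB per drive")           # → 600 GB per drive
print(f"{avg_bytes_per_file / 10**3:.0f} KB per file on average")  # → 120 KB per file on average
```

In other words, each drive would hold on the order of 600 GB, and the average file would be fairly small, around 120 KB.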

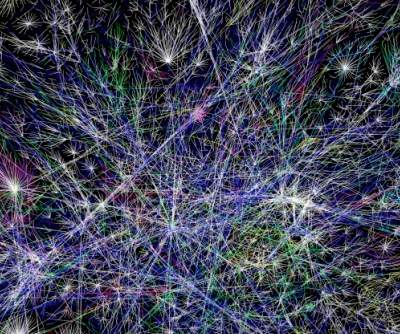

Put another way, the 120-petabyte drive will be able to store 24 billion five-megabyte MP3 files, or 60 copies of the 150 billion web pages archived by the Internet Archive's Wayback Machine.
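The comparisons check out arithmetically. Assuming decimal units (1 MB = 10^6 bytes, 1 PB = 10^15 bytes), the MP3 figure fills the capacity exactly, while fitting 60 Wayback copies means each copy can take up at most 2 PB, which works out to an average of no more than about 13.3 KB per archived page (a derived figure, not one reported by IBM):

$$
\begin{aligned}
24 \times 10^{9}\ \text{files} \times 5\ \text{MB} &= 120 \times 10^{9}\ \text{MB} = 120\ \text{PB} \\
\frac{120\ \text{PB}}{60\ \text{copies}} &= 2\ \text{PB per copy} \\
\frac{2 \times 10^{15}\ \text{bytes}}{150 \times 10^{9}\ \text{pages}} &\approx 13.3\ \text{KB per page}
\end{aligned}
$$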

Although IBM is designing the drive for an unnamed client, the technology behind it could pave the way for similar systems in more conventional commercial computing applications.

“[Sure], this 120 petabyte system is on the lunatic fringe now, but in a few years it may be that all cloud computing systems are like it,” Hillsberg told MIT Technology Review.

Steve Conway of IDC, meanwhile, said that IBM's data container would be significantly bigger than any other storage system he has come across.

“A 120-petabyte storage array would easily be the largest I’ve encountered,” said Conway, who noted that the largest arrays currently available weigh in at approximately 15 petabytes.

Unsurprisingly, IBM engineers had to refine a number of techniques to keep such a large storage system running smoothly. The drives are cooled by circulating water rather than fans, and when a drive breaks down, the system automatically writes its data to a replacement drive, allowing a supercomputer to keep working at almost full speed. The system also runs IBM's General Parallel File System (GPFS), which spreads individual files across multiple disks.
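To make the last two ideas concrete, here is a deliberately simplified Python sketch of striping plus recovery. It is a hypothetical toy, not GPFS's actual allocation or rebuild logic: it cuts a file into tiny blocks, places block `i` on disk `i % n` with a mirror copy on the next disk, keeps the file readable after one disk fails, and then refills a replacement disk from the surviving copies. All names (`stripe`, `placement`, `rebuild`, and so on) and the 4-byte block size are illustrative assumptions; real systems use much larger blocks, perform the reads and writes in parallel, and rely on more sophisticated replication and RAID schemes.

```python
"""Toy model of file striping with mirrored blocks and disk rebuild (hypothetical, not GPFS)."""

BLOCK_SIZE = 4  # bytes per block; deliberately tiny so the layout is easy to follow


def split_blocks(data: bytes, block_size: int = BLOCK_SIZE) -> list[bytes]:
    """Cut a file into fixed-size blocks; the last block may be shorter."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def placement(i: int, num_disks: int) -> tuple[int, int]:
    """Primary disk for block i (round-robin) and its mirror on the next disk."""
    return i % num_disks, (i + 1) % num_disks


def stripe(data: bytes, num_disks: int) -> tuple[dict[int, dict[int, bytes]], int]:
    """Spread a file's blocks across disks; returns (disk -> {block index: bytes}, block count)."""
    if num_disks < 2:
        raise ValueError("need at least two disks so each block has a mirror")
    disks: dict[int, dict[int, bytes]] = {d: {} for d in range(num_disks)}
    blocks = split_blocks(data)
    for i, block in enumerate(blocks):
        for d in placement(i, num_disks):
            disks[d][i] = block
    return disks, len(blocks)


def read_file(disks: dict[int, dict[int, bytes]], num_blocks: int, num_disks: int) -> bytes:
    """Reassemble the file, taking each block from whichever of its two disks survives."""
    out = []
    for i in range(num_blocks):
        copies = [disks[d][i] for d in placement(i, num_disks) if d in disks]
        if not copies:
            raise OSError(f"block {i} lost: both copies were on failed disks")
        out.append(copies[0])
    return b"".join(out)


def rebuild(disks: dict[int, dict[int, bytes]], failed: int, num_blocks: int, num_disks: int) -> None:
    """Install an empty replacement for `failed` and refill it from the surviving copies."""
    replacement: dict[int, bytes] = {}
    for i in range(num_blocks):
        primary, mirror = placement(i, num_disks)
        if failed in (primary, mirror):
            survivor = mirror if failed == primary else primary
            replacement[i] = disks[survivor][i]
    disks[failed] = replacement


data = b"weather and climate model output"
disks, n_blocks = stripe(data, num_disks=4)
del disks[2]  # simulate drive 2 breaking down
assert read_file(disks, n_blocks, 4) == data  # still readable from the mirror copies
rebuild(disks, failed=2, num_blocks=n_blocks, num_disks=4)
print(sorted(disks[2]))  # → [1, 2, 5, 6] (block indices restored on the replacement)
```

Because each block's two copies sit on different disks, losing any single disk never loses data, and the rebuild only has to copy the blocks that lived on the failed disk rather than the whole file.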