At VMworld, Nvidia launched GRID 2.0, bringing its Maxwell GPU to the product line. GRID 1.0 used Kepler-based GPUs on Tesla AIBs for VDI and was designed for rack-mounted servers. The new Tesla AIBs are for use in blade servers from several suppliers, such as Cisco, Dell, HP and Lenovo.

Nvidia says the Maxwell GPU can be mounted in blade servers, which will greatly increase compute density and, therefore, the number of users that can be served. The company also claims GRID 2.0 will more than double the performance of GRID 1.0. Linux support has also been added alongside Windows, which Nvidia says lets it make the 2X claim across the board.

Nvidia claims GRID 2.0 offers 2X everything, plus new capabilities for accelerated virtual desktops. (Source: Nvidia)

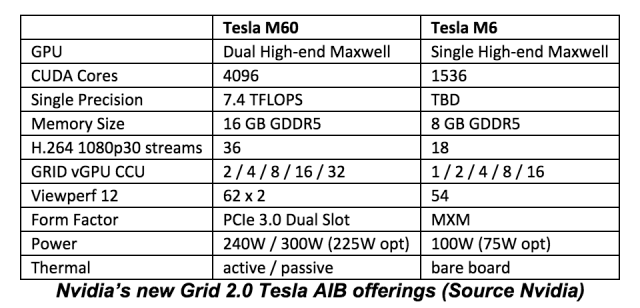

Nvidia says that with the Maxwell M60 Tesla AIBs it can double the number of concurrent users, to as many as 128, while keeping the same performance. Nvidia will actually offer two AIBs: the M60 with dual GPUs, and the M6 with a single GPU.

The company says nearly a dozen Fortune 500 companies are completing trials of the Nvidia GRID 2.0 beta. Server vendors, including Cisco, Dell, HP and Lenovo, have qualified the GRID solution to run on 125 server models, including new blade servers. Nvidia said it has worked closely with Citrix on XenApp, XenDesktop and XenServer, and with VMware on Horizon and vSphere.

Nvidia said that virtualizing enterprise workflows from the data center has not been possible until now because of low performance, a poor user experience, and limited server and application support. According to Nvidia, GRID 2.0 integrates the GPU into the data center and clears away these barriers, in part by offering a blade version through its partners.

In effect, Nvidia is saying: if you, Mr. Customer, had any barrier to deployment before (like server density), we pretty much gotcha covered now.

Blade server support: Enterprises can now run GRID-enabled virtual desktops on blade servers, not just rack servers, from leading blade server providers.

What do we think?

Given Nvidia’s propensity to build complex high-density AIBs, and its willingness to assemble rack-mount systems, it’s a little surprising that it held back on building a blade of its own.

However, blade servers have restricted I/O options, so it’s not possible to get the same high GPU-to-CPU ratios you’d find in a high-performance computing system. Nonetheless, Nvidia thinks companies will employ the new Tesla AIBs for jobs other than VDI. A blade with a tightly coupled GPU would make a powerful compute accelerator. As for the dweebs on the other end who want to see pretty pictures, they can use GRID 1.0.