Astronomers have made the most precise measurement ever of how the universe has cooled down during its 13.77-billion-year history – putting the Big Bang theory to the test.

They studied molecules in clouds of gas in a galaxy 7.2 billion light years away – so far away that its light has taken half the age of the universe to reach us.

To make the measurement they used the CSIRO Australia Telescope Compact Array, an array of six 22-metre radio telescopes in eastern Australia.

“The gas in this galaxy is so rarefied that the only thing keeping its molecules warm is the cosmic background radiation – what’s left of the Big Bang,” says Sebastien Muller of Chalmers University of Technology and Onsala Space Observatory.

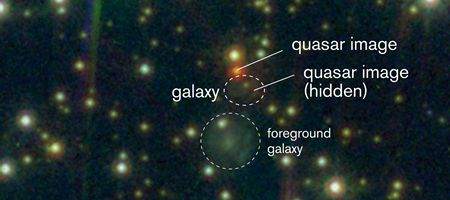

Taking advantage of a lucky alignment, the team measured light from an even more distant source behind the galaxy, a brightly shining quasar known as PKS 1830-211.

“We analysed radio waves from the quasar which had passed through the gas in the galaxy. In the radio waves, we could identify traces of many different molecules,” says Muller.

With a sophisticated computer model, the astronomers used these molecular signatures to measure the temperature in the gas clouds of the galaxy. The figure they came up with was 5.08 kelvin (± 0.10 kelvin), significantly warmer than today’s universe, which is 2.73 kelvin.

“The temperature of the cosmic background radiation in the past has been measured before, at even larger distances,” says Alexandre Beelen, astronomer at the Institute for Space Astrophysics at the University of Paris.

“But this is the most precise measurement yet of the ambient temperature when the universe was younger than it is now.”

According to the Big Bang theory, the temperature of the cosmic background radiation should drop smoothly as the universe expands.

“That’s just what we see in our measurements,” says Muller. “The universe of a few billion years ago was a few degrees warmer than it is now, exactly as the Big Bang theory predicts.”
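
For readers who want to check the arithmetic, the smooth cooling predicted by the Big Bang theory is usually written as T(z) = T0 × (1 + z), where T0 is today's background temperature and z is the redshift of the light. The short Python sketch below applies that standard relation to the two temperatures quoted above. It is an illustration only, not the team's computer model. The article does not give the galaxy's redshift, so the value of about 0.89 used here is an assumption taken from outside the article, and the function and variable names are made up for this example.

```python
# Illustrative sketch only: applies the standard cooling relation
# T(z) = T0 * (1 + z) to the figures quoted in the article.

T_TODAY_K = 2.73      # background temperature today, from the article
T_MEASURED_K = 5.08   # temperature measured in the distant galaxy, from the article
T_ERR_K = 0.10        # quoted uncertainty on the measurement, from the article

# ASSUMPTION: not stated in the article; approximate redshift of the galaxy.
REDSHIFT_ASSUMED = 0.89


def cmb_temperature(z, t0=T_TODAY_K):
    """Background temperature (kelvin) at redshift z under the standard relation."""
    return t0 * (1.0 + z)


def implied_redshift(t_measured, t0=T_TODAY_K):
    """Redshift at which the standard relation gives t_measured."""
    return t_measured / t0 - 1.0


# Redshift range implied by the measured temperature and its uncertainty.
z_implied = implied_redshift(T_MEASURED_K)
z_low = implied_redshift(T_MEASURED_K - T_ERR_K)
z_high = implied_redshift(T_MEASURED_K + T_ERR_K)

# Temperature the relation predicts at the assumed redshift.
predicted_k = cmb_temperature(REDSHIFT_ASSUMED)

if __name__ == "__main__":
    print(f"Implied redshift: {z_implied:.2f} (range {z_low:.2f} to {z_high:.2f})")
    print(f"Predicted temperature at z = {REDSHIFT_ASSUMED}: {predicted_k:.2f} K")
    print(f"Measured temperature: {T_MEASURED_K:.2f} +/- {T_ERR_K:.2f} K")
```

If the assumed redshift is right, the predicted temperature falls inside the quoted uncertainty of the measurement, which is the agreement Muller describes.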